Section: New Results

Praxis and Gesture Recognition

Participants : Farhood Negin, Jeremy Bourgeois, Emmanuelle Chapoulie, Philippe Robert, François Brémond.

keywords: Gesture Recognition, Dynamic and Static Gesture, Alzheimer Disease, Reaction Time, Motion Descriptors

Challenges and Proposed Method

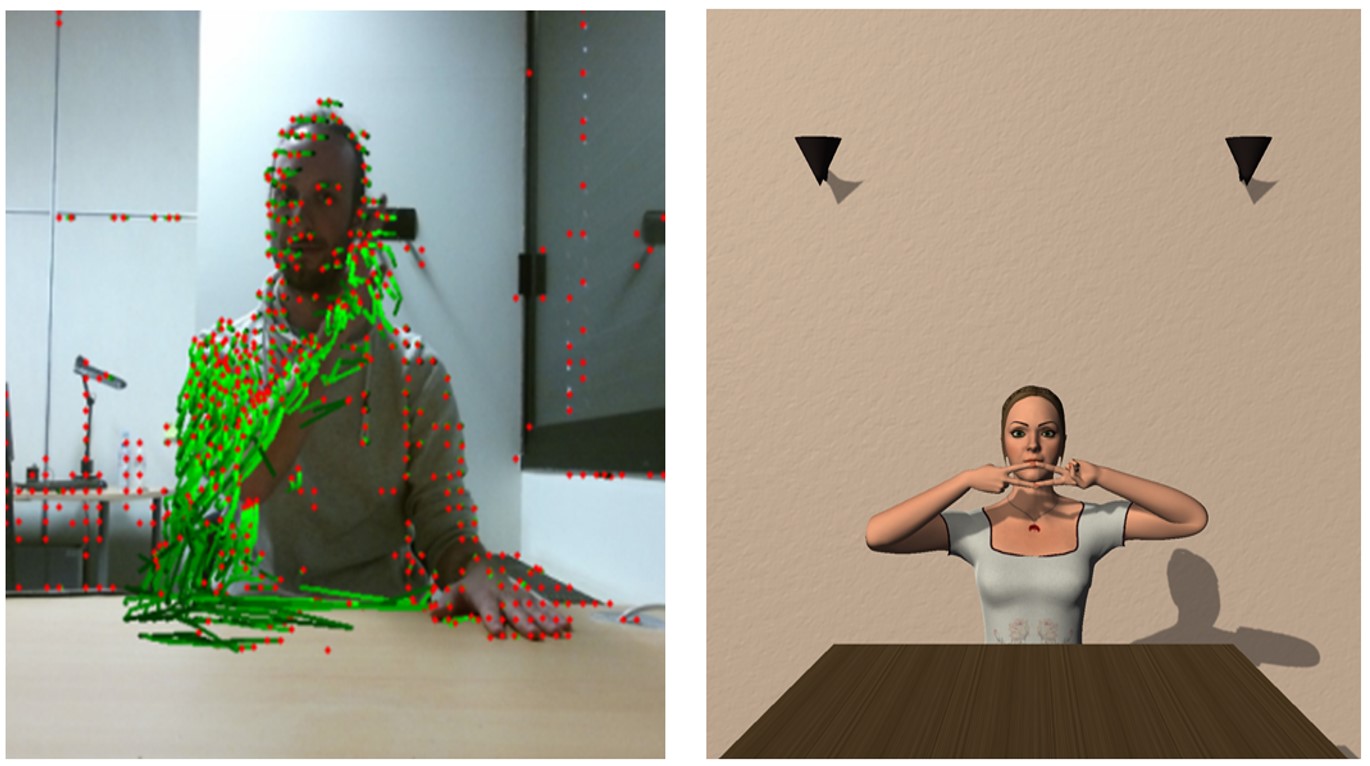

Most developed societies are experiencing an aging of their population. Aging is correlated with cognitive impairment such as dementia and its most common form, Alzheimer's disease. There is therefore an urgent need for technological tools that help doctors make early and precise diagnoses of cognitive decline. The inability to correctly perform purposeful skilled movements with the hands and other forelimbs is most commonly associated with Alzheimer's disease [84]. These patients have difficulty correctly imitating hand gestures and miming tool use (e.g. pretending to brush one's hair), and they make spatial and temporal errors. We propose a gesture recognition and evaluation framework as a complementary tool to help doctors spot symptoms of cognitive impairment at its early stages. The framework can also be used to assess a person's cognitive status. First, the algorithm classifies each performed gesture into one of the classes of the predefined gesture set; it then evaluates gestures of the same class to determine how well they are performed compared to templates of the correct gesture.

Methods

Shape and motion descriptors such as HOG (histogram of oriented gradients) [61] and HOF (histogram of optical flow) [62] are efficient cues for characterizing different gestures (Figure 20 Left). The extracted descriptors are used as input to train the classifiers, and we use a bag-of-visual-words approach to represent each gesture by its descriptors. Classification happens in two steps: first, we train a classifier to distinguish between the different gestures; then, for each gesture class, we train another classifier with correct and incorrect samples of that class. This way, we can recognize which gesture is performed and whether it is performed accurately.
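The bag-of-visual-words encoding and the two-step classification described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the local HOG/HOF descriptors are assumed to be already extracted (toy 2-D vectors stand in for them), the codebook is learned with plain k-means using a deterministic farthest-point seeding chosen here for repeatability, and a simple nearest-centroid classifier is swapped in for the classifiers, which the text does not name. All function and class names (`build_codebook`, `bovw_histogram`, `NearestCentroid`, `TwoStageGestureEvaluator`) are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_codebook(descriptors: List[Vector], k: int, iters: int = 20) -> List[List[float]]:
    """Learn k visual words with Lloyd's k-means.

    Assumption: centers are seeded with farthest-point (maximin)
    initialization so that the sketch is deterministic.
    """
    centers = [list(descriptors[0])]
    while len(centers) < k:
        # Next seed: the descriptor farthest from every current center.
        far = max(descriptors, key=lambda d: min(sq_dist(d, c) for c in centers))
        centers.append(list(far))
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for d in descriptors:
            nearest = min(range(k), key=lambda j: sq_dist(d, centers[j]))
            clusters[nearest].append(d)
        for j, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return centers


def bovw_histogram(descriptors: List[Vector], codebook: List[List[float]]) -> List[float]:
    """Quantize each local descriptor to its nearest visual word; return an L1-normalized histogram."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        nearest = min(range(len(codebook)), key=lambda j: sq_dist(d, codebook[j]))
        hist[nearest] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


class NearestCentroid:
    """Hypothetical stand-in classifier: predicts the label whose mean training histogram is closest."""

    def fit(self, X: List[Vector], y: List[str]) -> "NearestCentroid":
        groups: Dict[str, List[Vector]] = defaultdict(list)
        for x, label in zip(X, y):
            groups[label].append(x)
        self.centroids = {
            label: [sum(col) / len(xs) for col in zip(*xs)] for label, xs in groups.items()
        }
        return self

    def predict(self, x: Vector) -> str:
        return min(self.centroids, key=lambda label: sq_dist(x, self.centroids[label]))


class TwoStageGestureEvaluator:
    """Stage 1 recognizes the gesture; stage 2 (one model per gesture class) judges correct vs incorrect."""

    def fit(self, X: List[Vector], gestures: List[str], quality: List[str]) -> "TwoStageGestureEvaluator":
        self.recognizer = NearestCentroid().fit(X, gestures)
        self.judges: Dict[str, NearestCentroid] = {}
        for g in set(gestures):
            idx = [i for i, label in enumerate(gestures) if label == g]
            self.judges[g] = NearestCentroid().fit([X[i] for i in idx], [quality[i] for i in idx])
        return self

    def predict(self, x: Vector) -> Tuple[str, str]:
        gesture = self.recognizer.predict(x)
        return gesture, self.judges[gesture].predict(x)
```

In use, each recorded gesture would be turned into one histogram with `bovw_histogram(descriptors, codebook)`, and `TwoStageGestureEvaluator().fit(histograms, gesture_labels, quality_labels).predict(h)` returns a pair such as `("wave", "correct")`. Keeping one quality model per gesture class mirrors the two-step scheme in the text: the second classifier only ever compares samples of the same gesture.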


Experiments and Results

The framework takes as input data coming from a depth sensor (Kinect, Microsoft). In the first phase, correct samples of the gestures, performed by clinicians, are recorded, and the framework is trained using these correct instances of each gesture class. In the second phase, participants are asked to perform the gestures. We use virtual reality as the interaction modality to make the experience more immersive and realistic: first, an avatar performs a specific gesture, and then she asks the subject to repeat the same gesture (Figure 20 Right). In this work, we analyze two categories of gestures. The first category is dynamic gestures, where the whole motion of the hands is considered as a complete gesture. The second category is static gestures, where only a static pose of the hands is the desired gesture; for static gestures, we therefore also need to detect the key frame that contains this pose. Moreover, the reaction time, i.e. the delay between the moment the avatar asks the subject to perform the gesture and the moment the subject actually starts performing it, could be an important diagnostic factor. Our algorithm uses motion descriptors to detect key frames and reaction time. In preliminary tests, our framework correctly recognized more than 80% of the dynamic gestures, and it detected key frames and reaction times with high precision. The proposed gesture recognition framework can thus help doctors by providing a complete assessment of the gestures performed by a subject.
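How motion information can yield both the reaction time and the key frame of a static gesture is sketched below. This is an illustrative sketch only: the text says motion descriptors are used but does not give the rule, so here a simple per-frame motion score (mean absolute difference between consecutive depth frames) is swapped in for HOF-style descriptors, movement onset is assumed to be the first run of `min_run` frames above a threshold after the avatar's prompt, and the key frame is assumed to be the middle of the first still segment after onset. The thresholds, run lengths, and all function names are hypothetical.

```python
from typing import List, Optional, Sequence

Frame = Sequence[Sequence[float]]  # one depth image as rows of pixel values


def motion_energy(frames: List[Frame]) -> List[float]:
    """Per-frame motion score: mean absolute difference from the previous frame (frame 0 scores 0.0)."""
    energy = [0.0]
    for prev, cur in zip(frames, frames[1:]):
        diffs = [abs(a - b) for row_p, row_c in zip(prev, cur) for a, b in zip(row_p, row_c)]
        energy.append(sum(diffs) / len(diffs))
    return energy


def motion_onset(energy: List[float], prompt_frame: int, threshold: float, min_run: int = 3) -> Optional[int]:
    """First frame at or after the prompt that starts a run of min_run frames above threshold."""
    run = 0
    for i in range(prompt_frame, len(energy)):
        if energy[i] > threshold:
            run += 1
            if run == min_run:
                return i - min_run + 1
        else:
            run = 0
    return None


def reaction_time(energy: List[float], prompt_frame: int, fps: float,
                  threshold: float, min_run: int = 3) -> Optional[float]:
    """Seconds between the avatar's prompt and the detected start of the subject's movement."""
    onset = motion_onset(energy, prompt_frame, threshold, min_run)
    return None if onset is None else (onset - prompt_frame) / fps


def static_key_frame(energy: List[float], onset: int, threshold: float,
                     min_still: int = 3) -> Optional[int]:
    """Middle frame of the first still segment (at least min_still frames at or below threshold) after onset."""
    run_start = None
    for i in range(onset, len(energy)):
        if energy[i] > threshold:
            run_start = None
            continue
        if run_start is None:
            run_start = i
        if i - run_start + 1 == min_still:
            end = i
            # Extend to the end of the still segment before taking its middle.
            while end + 1 < len(energy) and energy[end + 1] <= threshold:
                end += 1
            return (run_start + end) // 2
    return None
```

For example, with a 30 fps recording whose motion score stays low for five frames after the prompt, rises while the hands move into position, and drops while the pose is held, `reaction_time` returns about 0.17 s and `static_key_frame` points to the middle of the held pose. On real sensor data, the motion score would likely need smoothing before thresholding.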

This work is published in [30] and will appear in the Gerontechnology Journal.